After reading Ruben Penalva’s homonym great post in his blog, and watching the GDC talk Task-based Multithreading — How to Program for 100 Cores I felt inspired to try my own implementation.

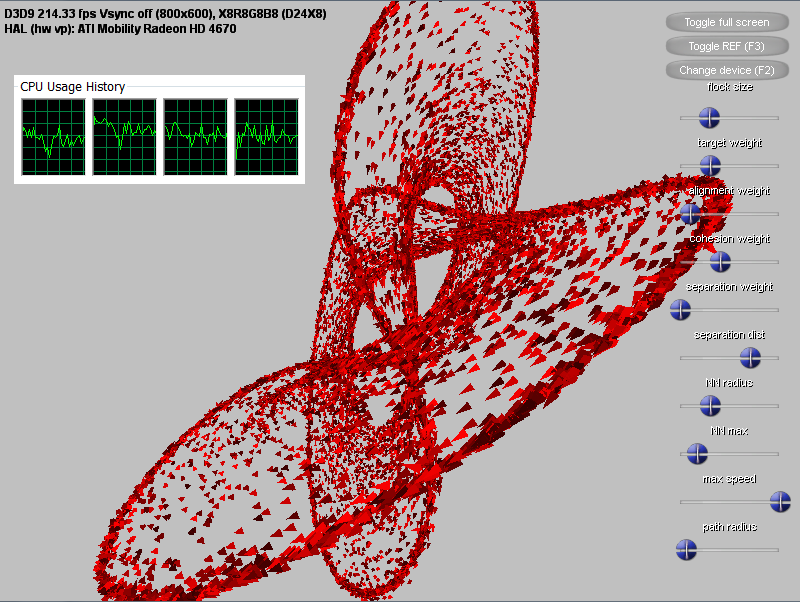

The idea is to implement a flock simulation such as the one described by Craig Reynolds trying to distribute the calculations among all the available hardware threads. An easy way to do so is by using Win32’s support for thread pools, dividing the problem into smaller tasks which can be carried out in parallel. The simulation consists mainly in iterating large arrays of attributes for each entity (position, velocity… ), updating them with the information from nearby entities, which are retrieved by k-nearest neighbour queries. Thus the problem has a trivial paralellization by splitting those arrays by the number of tasks -usually a multiple of the available threads-. In the process of implementhing this demo, however, I found that there are two main factors limiting the throughput:

- The ratio between computation and memory access is low: since in order to update each entity we must query a given number of other entities attributes, memory bandwith becomes a bottleneck which stalls the threads.

- There are parts of my code which are not completely parallel – rendering being the most obvious example: I use DirectX’s hardware instancing to copy a vertex stream defining the shape of the particles many times according to a second vertex stream which contains the positions and orientations. The copy from the CPU-side arrays to the vertex buffer happens sequentially.

- Also I found the kNN queries to become a limiting factor as well (although not as bad as I expected, considering the bottlenecks mentioned above). The brute-force approach for computing distances between each pair of particles works surprisingly well for moderate amounts of particles, but of course it scales poorly. I spent a some time looking up information about dynamic kNN queries, but despite the problem has been studied extensively, the vast majority of the results seem to apply to static data sets, with tree-based spatial structures (if someone reads this and happens to know a good paper, I’d love to hear from it!). So I ended up implementing a simple grid-hashing approach, aiming to reduce the number of queries to the elements contained in the same cell. The grid cells are fairly large, so we can speed things up by updating the solution every X ms, instead of every frame.

With all this, the simulation runs at a decent rate of above 100fps for 50k particles in my laptop. If you want to check it out, you can download the application here (requires Windows Vista or newer and DirectX)